Technical Overview

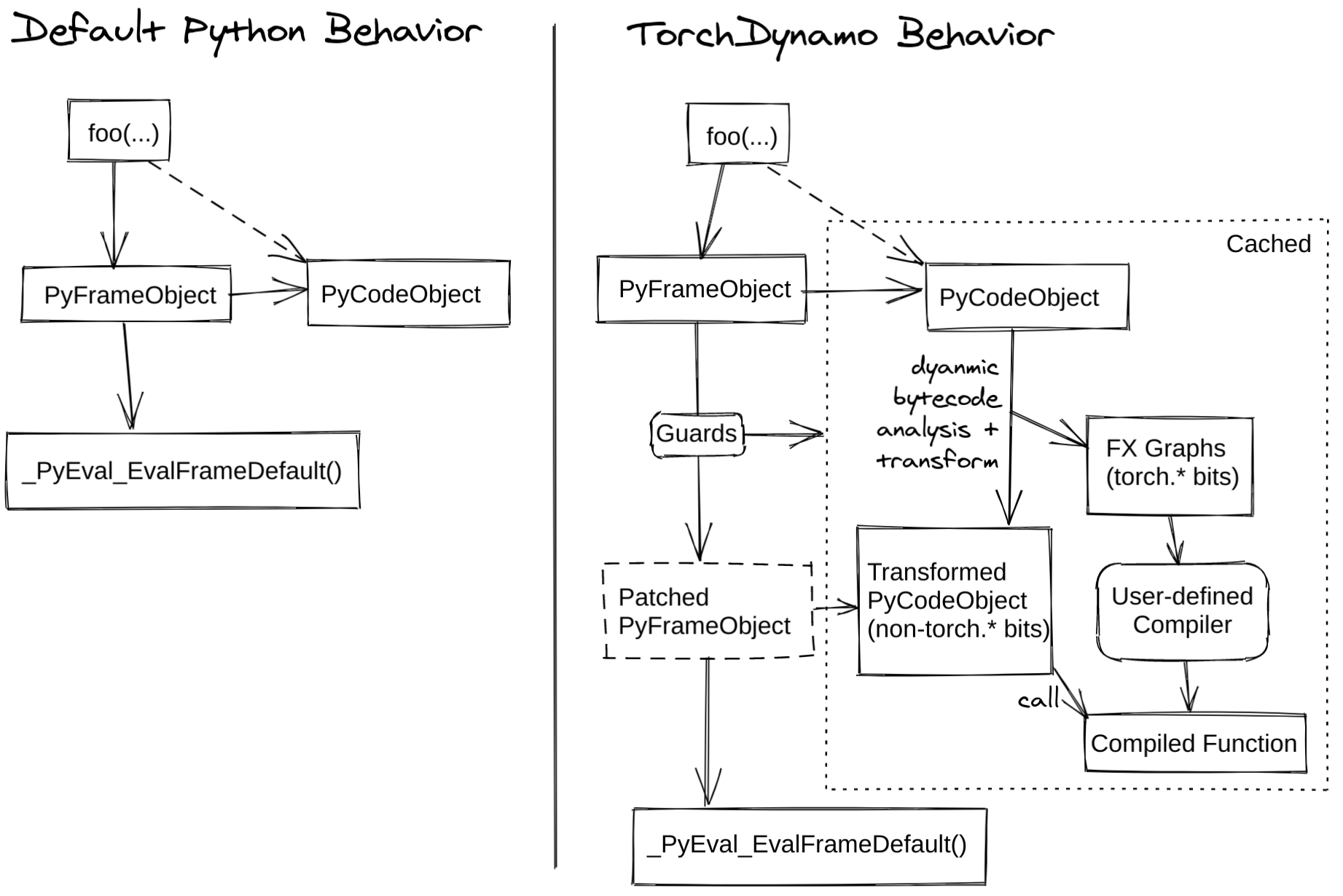

TorchDynamo is a Python-level JIT compiler designed to make unmodified PyTorch programs faster. TorchDynamo hooks into the frame evaluation API in CPython (PEP 523) to dynamically modify Python bytecode right before it is executed. It rewrites Python bytecode in order to extract sequences of PyTorch operations into an FX Graph which is then just-in-time compiled with a customizable backend. It creates this FX Graph through bytecode analysis and is designed to mix Python execution with compiled backends to get the best of both worlds — usability and performance.
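The capture step described above can be observed with a small custom backend. The following is a minimal sketch, not TorchDynamo's internal implementation: `inspecting_backend`, `captured_graphs`, and `toy_fn` are hypothetical names introduced for illustration. It assumes a PyTorch 2.x install where `torch.compile` accepts a callable backend that receives a `torch.fx.GraphModule` and example inputs.

```python
import torch

# Hypothetical container, used only to look at what TorchDynamo captured.
captured_graphs = []


def inspecting_backend(gm: torch.fx.GraphModule, example_inputs):
    """Record the captured FX graph, then run it unchanged (no real compilation)."""
    captured_graphs.append(gm)
    # A backend must return a callable that is run in place of the captured graph.
    return gm.forward


def toy_fn(x, y):
    a = torch.sin(x)
    b = torch.cos(y)
    return a + b


# Hand the function to TorchDynamo with our illustrative backend.
compiled_fn = torch.compile(toy_fn, backend=inspecting_backend)

x, y = torch.randn(8), torch.randn(8)
result = compiled_fn(x, y)  # the first call triggers bytecode analysis and capture

# Print the FX graph extracted from the Python bytecode of toy_fn.
print(captured_graphs[0].graph)
```

Because the backend returns `gm.forward` as is, the result should match eager execution. A real backend would instead return an optimized callable built from the same graph.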

TorchDynamo makes it easy to experiment with different compiler backends to make PyTorch code faster with a single-line decorator, torch._dynamo.optimize(), which is wrapped for convenience by torch.compile().
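As a rough illustration of the single-line decorator usage, the sketch below wraps a small function with `torch.compile`. The function `scaled_relu` is a hypothetical example. The `"eager"` backend is chosen here only as an assumption to keep the sketch lightweight: it exercises TorchDynamo's graph capture without generating code. Omitting the `backend` argument selects the default backend instead.

```python
import torch


# One decorator line is all that changes in the user code.
@torch.compile(backend="eager")
def scaled_relu(x):
    return torch.relu(x) * 2.0


x = torch.tensor([-1.0, 0.5, 3.0])
out = scaled_relu(x)  # captured by TorchDynamo on the first call
print(out)
```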

TorchInductor is one of the backends supported by TorchDynamo. It compiles the FX Graph into Triton for GPUs or C++/OpenMP for CPUs. We have a training performance dashboard that compares the performance of different training backends. You can read more in the TorchInductor post on PyTorch dev-discuss.
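To see which backends a given installation exposes, something like the following sketch may help. It assumes a PyTorch 2.x build in which `torch._dynamo.list_backends()` is available, and the exact list it returns depends on the installed version and optional packages. The function `add_one` is a hypothetical example.

```python
import torch
import torch._dynamo

# Names of the registered, non-debug backends in this installation.
available = torch._dynamo.list_backends()
print(available)


def add_one(x):
    return x + 1


# Select TorchInductor explicitly by name. Compilation is deferred until the
# first call, so this line alone does not generate Triton or C++ code.
inductor_fn = torch.compile(add_one, backend="inductor")
```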